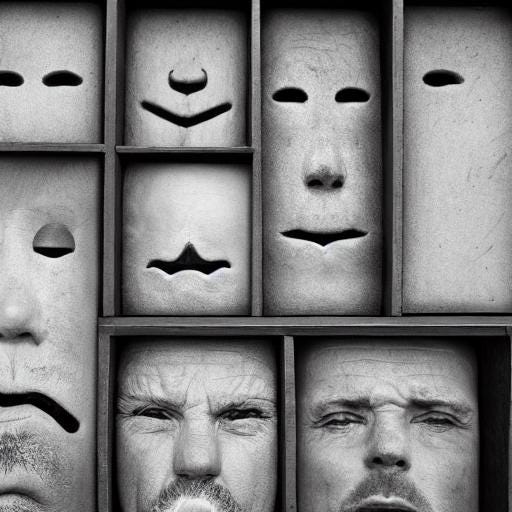

When I used GenAI to create graphics for my Substack, Deeply Boring, a recurring frustration was images that made no sense. The “multi-faced man” in the lower left of my introductory post “On Poverty and Porosity” is an example. Using the prompt “a drawer divided into compartments with faces in them”, I sought to convey the idea that we put on different faces based on how we choose to show up in separate life spheres. But it kept generating “heads” with multiple mouths and noses. After repeated attempts, I kept the image as an Easter egg, with a post such as this in mind.

Curiously, I would never have done this with a human artist: adopting someone’s work with the intent of later shaming it in public. As a society, we frown upon such trespasses (or used to). An English statute of 1843, the Libel Act, made knowingly false defamatory libel punishable by up to two years in jail. But robots don’t have feelings. Or do they? Microsoft explains that “using polite language [with Microsoft Copilot] sets a tone for the response…when it clocks politeness, it’s more likely to be polite back”.

Interesting. The notion that being polite to AI may invite reciprocal politeness and is also “good practice for interacting with humans” (Microsoft) seems harmless. But it’s also superficial, because politeness is practiced civility. We all have the ability to be polite, even to people we truly dislike, if we want to. That’s not what matters. The inner voice and psychological mechanisms that reflect how we truly feel about someone we intensely dislike, and how those feelings influence our behavior, don’t operate at the surface level of social etiquette. The outer layer is performance. The inner layer is character. Can (or should) our interactions with AI shape our character?

When I interact with people I respect (my parents, teachers, mentors, peers, my children, my spouse), I crave their acceptance and approval. This desire plays a significant role in how I respond to their feedback. If they think my plan is a bad idea, I will revisit my point of view. If they feel I can “do better for myself”, that may encourage me to stretch. But likewise, if they are disappointed with choices I’ve made, I will pause and reflect deeply. The difference in perspective will induce tension and stir up complicated feelings such as guilt, conflict, or spite. But it could also shape me for the better. This is how we grow as human beings: through a continual stream of interactions that constantly reset the boundaries of what we want to be and, if we are fortunate, also supply the courage to strive for them.

But what would happen if we increased our quotient of interaction with, and dependence on, digital helpers that cannot be disappointed in us? After all, it’s not unrealistic to conceive of our children growing up with “access” to the collected wisdom of humanity, delivered on tap and at scale through synthesized intelligence. LLMs can advise us on career choices, relationships, social graces, professional etiquette, and even questions of moral import (face it, to some people “should I tip my dry cleaner?” isn’t that far removed from “should I break up on TikTok?”). My fear is that the disappearance of disappointment from these interactions changes the means by which the human mind is curated and human character is developed.

We are all familiar with how anonymity has ruined the digital public square: the slippery willingness to say things that we would not say to someone’s face or in the presence of Mom. But I think this is merely symptomatic of something bigger. For over fifty years, we have underemphasized the vital role of healthy, loving disapproval as a means of constructive edification. And in so doing, we have surrendered interpersonal accountability for becoming better versions of ourselves. As a species we risk becoming smarter without becoming wiser. Is this not how we come to find ourselves in a world where swiping on Tinder passes for courtship? They could not be more different. One is brutal reductionism. The other is discovery of self through another.

Another way of stating this is as follows. When we interact with a chatbot, it is an information transaction (“tell me X”). When we interact with a human, it is both an information transaction and a relational interaction (“please spare the time to give me advice…because I trust you”). If we think of these only as information transactions, we may err in treating them as substitutable. But in reality, they are not. If we substitute enough human advisors with digital advisors, two things are lost: first, the relational interaction, and the resulting expectation, motivation, and bonding that come from a life lived together; and second, the ability to practice, improve, observe, emulate, learn, and pass along the skills that bond our lives together.

Am I alone in feeling we desperately need to preserve this marriage of information and relation, delivered through person-to-person conversations at kitchen tables, community centers, and places of worship? I can only disappoint someone who expects better of me. But if there is nobody to be disappointed in me, what is the societal actualization mechanism for my self-improvement? If we let this path to self-improvement slip away, will we be better equipped to increase human flourishing in the world?

J

P.S. I can’t believe it’s been 23 years since 9/11. What have we learned in the near quarter-century since?